Polynomial regression with Accord.NET

This post shows how to use the PolynomialRegression class of the Accord.NET framework, with the aim of demonstrating that classic polynomial approximation, as used in machine learning, can reach interesting levels of accuracy with extremely short learning times.

Although MLP (Multi Layer Perceptron) neural networks can be considered universal function approximators (see Fitting with highly configurable multi layer perceptrons on this website), for some types of datasets a classic supervised regression algorithm, such as the polynomial regression discussed here, can reach acceptable levels of accuracy at a significantly lower computational cost than an MLP.

To learn more about approximating a real-valued function of one variable with an MLP, see One-variable real-valued function fitting with TensorFlow and One-variable real-valued function fitting with PyTorch.

In the real world, datasets exist before the learning phase: they are obtained by extracting data from production databases or Excel files, from the output of measurement instruments, from data loggers connected to electronic sensors and so on, and are then used in the learning phase.

Since the focus here is polynomial approximation itself rather than the approximation of a real phenomenon, the datasets used in this post are generated synthetically. This makes it possible to stress the algorithm and see for which types of datasets it reaches acceptable accuracy and for which it struggles.
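As a minimal sketch of what synthetic generation means here, the following plain C# code samples $y = f(x)$ on an interval with a fixed step. The helper `SyntheticDataset.Generate` and the two step values are hypothetical, chosen only for illustration; the repository's own generator may differ, and value tuples stand in for the project's XtoY entity.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not from the repository): samples y = f(x)
// on [from, to] with a fixed discretization step.
public static class SyntheticDataset
{
    public static List<(double X, double Y)> Generate(
        Func<double, double> f, double from, double to, double step)
    {
        var ds = new List<(double X, double Y)>();
        // Number of steps that fit in the interval; the small epsilon
        // guards against (to - from) / step landing just below an integer.
        int n = (int)Math.Floor((to - from) / step + 1e-9);
        for (int i = 0; i <= n; ++i)
        {
            // x is derived from the integer index, not accumulated.
            double x = from + i * step;
            ds.Add((x, f(x)));
        }
        return ds;
    }
}

public static class Program
{
    public static void Main()
    {
        // Illustrative steps for f(x) = sin x on [-2*pi, 2*pi]; the test
        // step is deliberately not a multiple of the learning step.
        var learn = SyntheticDataset.Generate(Math.Sin, -2 * Math.PI, 2 * Math.PI, 0.01);
        var test = SyntheticDataset.Generate(Math.Sin, -2 * Math.PI, 2 * Math.PI, 0.0075);
        Console.WriteLine($"learn: {learn.Count} points, test: {test.Count} points");
    }
}
```

Computing each $x$ from an integer index, rather than by repeatedly adding the step, keeps rounding errors from accumulating over thousands of points.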

Implementation

The code described in this post is written in C# and requires Microsoft .NET Core 3.1 and the Accord.NET 3.8.0 framework.

To get the code please see the paragraph Download of the complete code at the end of this post.

The most important class of the project is PolynomialLeastSquares1to1Regressor, which is a wrapper around the PolynomialRegression class of Accord.NET.

using System.Globalization;
using System.Collections.Generic;
using System.Linq;
using Accord.Statistics.Models.Regression.Linear;
using Accord.Math.Optimization.Losses;
using Regressors.Entities;
using Regressors.Exceptions;

namespace Regressors.Wrappers
{
    // Wraps Accord.NET's PolynomialLeastSquares learner and the
    // PolynomialRegression model it produces (one input, one output).
    public class PolynomialLeastSquares1to1Regressor
    {
        private int _degree;
        private bool _isRobust;
        private PolynomialRegression _polynomialRegression;

        public PolynomialLeastSquares1to1Regressor(int degree, bool isRobust = false)
        {
            _degree = degree;
            _isRobust = isRobust;
        }

        // Coefficients of the non-constant terms (available only after Learn).
        public double[] Weights
        {
            get
            {
                AssertAlreadyLearned();
                return _polynomialRegression.Weights;
            }
        }

        // Constant term of the learned polynomial (available only after Learn).
        public double Intercept
        {
            get
            {
                AssertAlreadyLearned();
                return _polynomialRegression.Intercept;
            }
        }

        private void AssertAlreadyLearned()
        {
            if (_polynomialRegression == null)
                throw new NotTrainedException();
        }

        // Learned polynomial as text ("y(x) = ..."), or an empty string
        // if Learn has not been called yet.
        public string StringfyLearnedPolynomial(string format = "e")
        {
            if (_polynomialRegression == null)
                return string.Empty;
            return _polynomialRegression.ToString(format, CultureInfo.InvariantCulture);
        }

        // Fits a polynomial of degree _degree to the (X, Y) pairs by least squares.
        public void Learn(IList<XtoY> dsLearn)
        {
            double[] inputs = dsLearn.Select(i => i.X).ToArray();
            double[] outputs = dsLearn.Select(i => i.Y).ToArray();
            var pls = new PolynomialLeastSquares() { Degree = _degree, IsRobust = _isRobust };
            _polynomialRegression = pls.Learn(inputs, outputs);
        }

        // Evaluates the learned polynomial at each x value. Being an iterator,
        // this method (including the trained check) runs only when enumerated.
        public IEnumerable<XtoY> Predict(IEnumerable<double> xvalues)
        {
            AssertAlreadyLearned();
            double[] xvaluesArray = xvalues.ToArray();
            double[] yvaluesArray = _polynomialRegression.Transform(xvaluesArray);
            for (int i = 0; i < xvaluesArray.Length; ++i)
            {
                yield return new XtoY() { X = xvaluesArray[i], Y = yvaluesArray[i] };
            }
        }

        // Square loss (Accord.NET SquareLoss) between expected and predicted
        // Y values, matched by position.
        public static double ComputeError(IEnumerable<XtoY> ds, IEnumerable<XtoY> predicted)
        {
            double[] outputs = ds.Select(i => i.Y).ToArray();
            double[] preds = predicted.Select(i => i.Y).ToArray();
            double error = new SquareLoss(outputs).Loss(preds);
            return error;
        }
    }
}
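The wrapper delegates the fitting to PolynomialLeastSquares. To show what least-squares polynomial fitting computes, here is a self-contained sketch in plain C# that builds the normal equations $(V^\top V)\,c = V^\top y$, where $V$ is the Vandermonde matrix with rows $(1, x_k, x_k^2, \dots, x_k^d)$, and solves them by Gaussian elimination with partial pivoting. `PolyFit.Fit` is a hypothetical helper, not part of Accord.NET, and this is not a description of how Accord.NET implements PolynomialLeastSquares internally.

```csharp
using System;

// Hypothetical helper, NOT part of Accord.NET: least-squares polynomial fit
// via the normal equations. Returns c[0..degree] for
// y = c[0] + c[1] x + ... + c[degree] x^degree (ascending powers).
public static class PolyFit
{
    public static double[] Fit(double[] xs, double[] ys, int degree)
    {
        int m = degree + 1;
        var a = new double[m, m + 1]; // augmented matrix [V^T V | V^T y]
        for (int k = 0; k < xs.Length; ++k)
        {
            // powers[j] = xs[k]^j for j = 0..degree (one row of V)
            var powers = new double[m];
            powers[0] = 1.0;
            for (int j = 1; j < m; ++j) powers[j] = powers[j - 1] * xs[k];
            for (int i = 0; i < m; ++i)
            {
                for (int j = 0; j < m; ++j) a[i, j] += powers[i] * powers[j];
                a[i, m] += powers[i] * ys[k];
            }
        }
        // Forward elimination with partial pivoting.
        for (int col = 0; col < m; ++col)
        {
            int pivot = col;
            for (int r = col + 1; r < m; ++r)
                if (Math.Abs(a[r, col]) > Math.Abs(a[pivot, col])) pivot = r;
            for (int c = 0; c <= m; ++c)
            {
                double tmp = a[col, c]; a[col, c] = a[pivot, c]; a[pivot, c] = tmp;
            }
            for (int r = col + 1; r < m; ++r)
            {
                double factor = a[r, col] / a[col, col];
                for (int c = col; c <= m; ++c) a[r, c] -= factor * a[col, c];
            }
        }
        // Back substitution.
        var coeffs = new double[m];
        for (int i = m - 1; i >= 0; --i)
        {
            double s = a[i, m];
            for (int j = i + 1; j < m; ++j) s -= a[i, j] * coeffs[j];
            coeffs[i] = s / a[i, i];
        }
        return coeffs;
    }
}

public static class Program
{
    public static void Main()
    {
        // Noise-free samples of y = 0.5x^3 - 2x^2 - 3x - 1 on [-5, 5].
        var xs = new double[101];
        var ys = new double[101];
        for (int i = 0; i < xs.Length; ++i)
        {
            double x = -5.0 + 0.1 * i;
            xs[i] = x;
            ys[i] = 0.5 * x * x * x - 2 * x * x - 3 * x - 1;
        }
        double[] c = PolyFit.Fit(xs, ys, 3);
        // Should print values close to -1, -3, -2, 0.5 (ascending powers).
        Console.WriteLine(string.Join(", ", c));
    }
}
```

The normal equations square the condition number of $V$, so this naive approach loses precision quickly as the degree grows; for the high degrees used later in this post (20 and 40), a decomposition-based solver such as QR or SVD is the usual choice.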

Code execution

On a Linux, Mac or Windows system where .NET Core 3.1 and a git client are already installed, run the following commands to download the source code and run the program:

git clone https://github.com/ettoremessina/accord-net-experiments
cd accord-net-experiments/Prototypes/CSharp/Regressors
dotnet build
dotnet run

After running dotnet run, nine folders are created in the out folder, each corresponding to a built-in test case (see the PolynomialLeastSquares1to1RegressorTester class); each folder contains four files:

- learnds.csv, the learning dataset in csv format with header;
- testds.csv, the test dataset in csv format with header;
- prediction.csv, the prediction file in csv format with header;
- plotgraph.m, a plotting script for Octave.

The file prediction.csv is obtained by applying the $x$ values of the test dataset to the regressor that learned the curve.

Note that the discretization step of the test dataset is not a multiple of the learning one, so that the test dataset contains mostly $x$ values not present in the learning dataset, which makes the prediction more interesting.

The script plotgraph.m, if run in Octave, produces a graph with two curves: the blue one is the test dataset, the red one is the prediction; the graphs shown below were generated by running these scripts. Alternatively, you can import the csv files into Excel or other spreadsheet software and generate charts from them, or use an online service for plotting charts from csv files.

The program produces one output block for each of the nine built-in test cases; in particular, each block shows the learned polynomial and the mean square error computed on the test dataset. Here is the first output block:
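The error in each block is computed by ComputeError through Accord.NET's SquareLoss. As a plain C# sketch of the metric the post calls mean square error, assuming the usual definition $\frac{1}{n}\sum_i (y_i - \hat y_i)^2$: `Metrics.MeanSquaredError` is a hypothetical helper, and SquareLoss has its own configuration options, so check its documentation before expecting identical numbers.

```csharp
using System;

// Hypothetical helper: mean of the squared differences between
// expected and predicted values, compared position by position.
public static class Metrics
{
    public static double MeanSquaredError(double[] expected, double[] predicted)
    {
        if (expected.Length != predicted.Length)
            throw new ArgumentException("Arrays must have the same length.");
        double sum = 0.0;
        for (int i = 0; i < expected.Length; ++i)
        {
            double d = expected[i] - predicted[i];
            sum += d * d;
        }
        return sum / expected.Length;
    }
}

public static class Program
{
    public static void Main()
    {
        double[] testY = { 1.0, 2.0, 3.0 };
        double[] predictedY = { 1.0, 2.0, 5.0 };
        // Only the last point differs, by 2, so the result is 4 / 3.
        Console.WriteLine(Metrics.MeanSquaredError(testY, predictedY));
    }
}
```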

Started test #PLSR_01
Generating learning dataset
Generating test dataset
Training
Learned polynomial y(x) = 5.000000e-001x^3 + -2.000000e+000x^2 + -3.000000e+000x^1 + -1.000000e+000
Predicting
Error: 9.42921358148529E-24
Saving
Terminated test #PLSR_01
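The learned polynomial printed in the output can be evaluated at any $x$. The sketch below applies Horner's method to the coefficients printed for PLSR_01, stored in ascending order of power; `Horner.Evaluate` and this ordering are choices made for the example, not the layout of PolynomialRegression.Weights.

```csharp
using System;

// Horner's method: evaluates c[0] + c[1] x + ... + c[n] x^n
// with n multiplications and n additions.
public static class Horner
{
    public static double Evaluate(double[] ascendingCoeffs, double x)
    {
        double y = 0.0;
        for (int i = ascendingCoeffs.Length - 1; i >= 0; --i)
            y = y * x + ascendingCoeffs[i];
        return y;
    }
}

public static class Program
{
    public static void Main()
    {
        // y(x) = 0.5x^3 - 2x^2 - 3x - 1, coefficients in ascending order.
        double[] coeffs = { -1.0, -3.0, -2.0, 0.5 };
        foreach (double x in new[] { -1.0, 0.0, 1.0, 2.0 })
            Console.WriteLine($"y({x}) = {Horner.Evaluate(coeffs, x)}");
    }
}
```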

Case study of polynomial approximation of a sinusoid

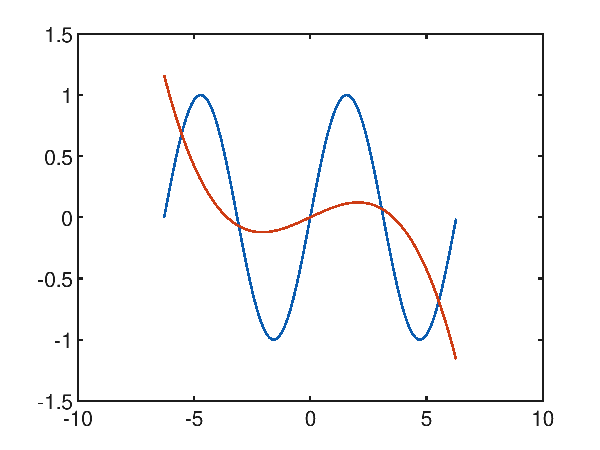

Given the synthetic dataset generated with a sinusoid $f(x)=\sin x$ within the interval $[-2 \pi, 2 \pi]$, the first attempt is to approximate it with a third-degree polynomial; from this chart:

it is clear that the result is far from the desired one, with a mean square error of 0.35855442002271176.

Reasoning empirically (note: this reasoning does not apply in general), the function to approximate has 2 relative minima and 2 relative maxima in the interval considered, that is, 5 stretches alternating between increasing and decreasing; so at least a fifth-degree polynomial is needed to reproduce these 5 stretches.
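The degree bound in this heuristic comes from counting the roots of the derivative: a polynomial $p$ that reproduces the four relative extrema must have a derivative that changes sign at four points, and a nonzero polynomial of degree $d$ has at most $d$ real roots:

```latex
\[
\begin{aligned}
&\sin x \text{ on } [-2\pi, 2\pi]:\ \text{maxima at } x = -\tfrac{3\pi}{2},\ \tfrac{\pi}{2};\quad \text{minima at } x = -\tfrac{\pi}{2},\ \tfrac{3\pi}{2} \\
&p \text{ with 4 relative extrema} \;\Rightarrow\; p'(x) \text{ has at least 4 real roots} \;\Rightarrow\; \deg p' \ge 4 \;\Rightarrow\; \deg p \ge 5
\end{aligned}
\]
```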

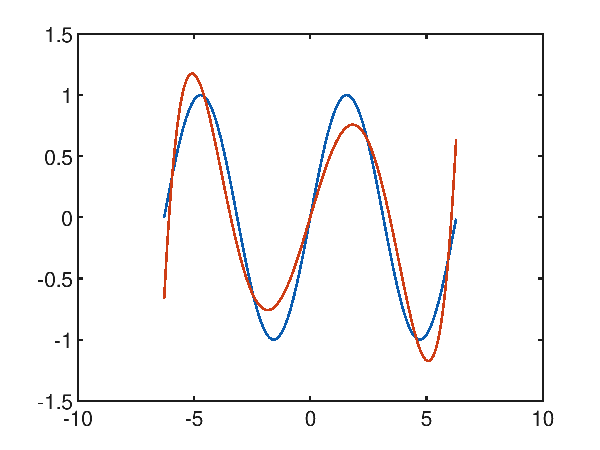

In fact, trying to approximate with a fifth-degree polynomial things are starting to get better:

and in fact the mean square error is 0.047802033570042826.

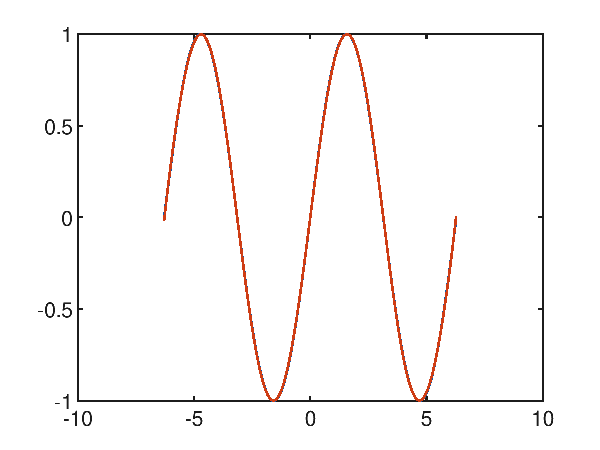

Raising the degree of the polynomial to 10, you can see that it is interestingly close to a very accurate approximation:

and in fact the mean square error drops to a very low value of 1.730499971068361E-05.

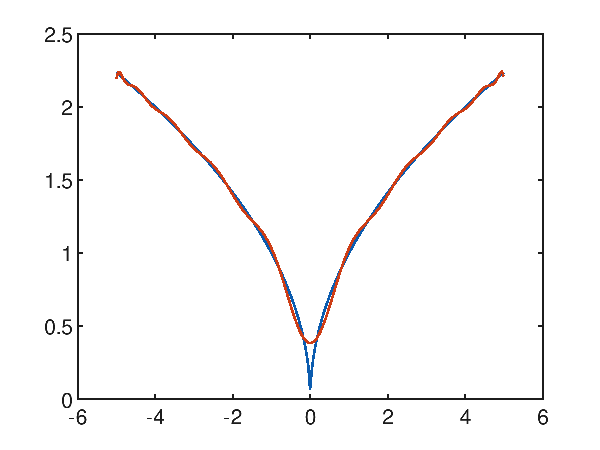

Case study of polynomial approximation of a function with a cusp

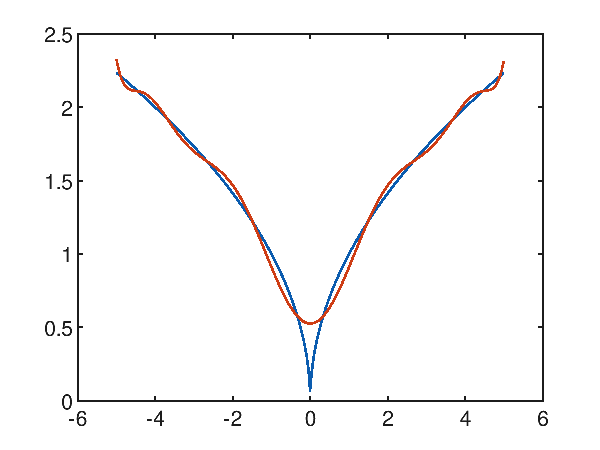

Given the synthetic dataset generated with the function $f(x)=\sqrt{|x|}$ within the interval $[-5, 5]$, which has a cusp at the origin, polynomial regression has difficulty approximating the cusp, as can be seen from the following graph of a tenth-degree polynomial that attempts to approximate the target function:

clearly the approximation fails around the cusp; over the whole interval, however, the mean square error is low, at 0.004819185301219476.

Even with a twentieth-degree polynomial:

the approximation around the cusp improves, but the cusp is still not reproduced; over the whole interval, however, the mean square error is considerably lower, at 0.0014078917181083847.

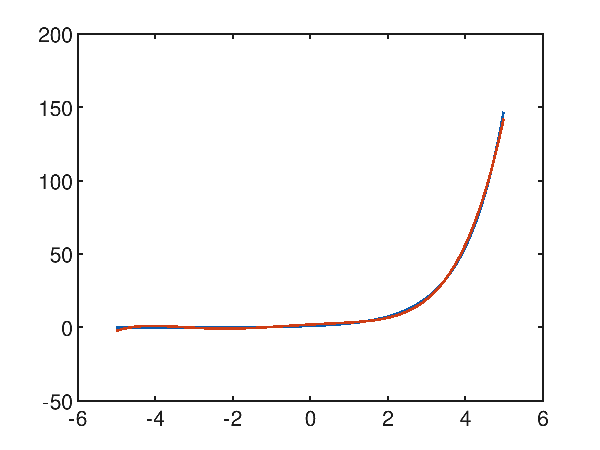
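A short calculation suggests why the cusp resists polynomial regression: the slope of $f$ is unbounded near the origin, whereas every polynomial is differentiable everywhere and has a bounded derivative on $[-5, 5]$, so it can only round the cusp off:

```latex
\[
f(x) = \sqrt{|x|} \;\Rightarrow\; f'(x) = \frac{\operatorname{sgn}(x)}{2\sqrt{|x|}} \quad (x \neq 0),
\qquad \lim_{x \to 0^{\pm}} |f'(x)| = +\infty,
\qquad \max_{x \in [-5, 5]} |p'(x)| < \infty \ \text{ for every polynomial } p
\]
```

Raising the degree lets the rounded region shrink, which matches the improvement seen with degree 20, but no polynomial of finite degree reproduces the cusp exactly, since $f$ is not differentiable at $0$.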

Case study of polynomial approximation of an exponential

Even though the exponential function is not a polynomial, contrary to what one might think, on a bounded interval a polynomial approximation reaches a high level of accuracy. Given the exponential function $f(x)=e^x$ within the interval $[-5, 5]$, polynomial regression with a fifth-degree polynomial already gives an acceptable result, with a mean square error of 0.9961909800481012, as the graph shows:

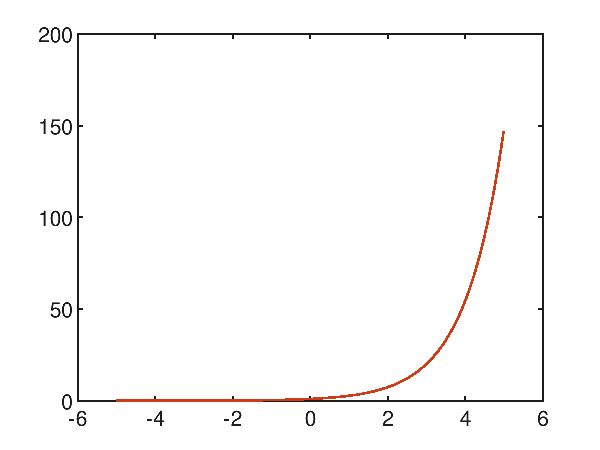

Using a tenth degree polynomial, the approximation becomes very accurate, with a mean square error of 1.6342296507040001E-06:

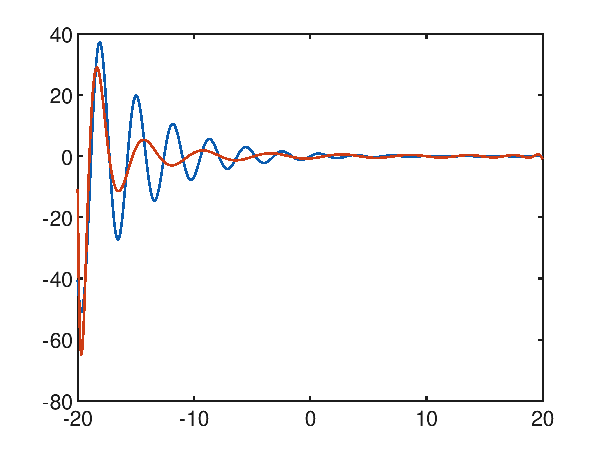
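This behaviour on a bounded interval is consistent with the Weierstrass approximation theorem: every function continuous on a closed, bounded interval can be approximated uniformly, to any accuracy, by a polynomial. For $e^x$, one explicit family of such polynomials is given by the truncations of its Taylor series, which converge uniformly on $[-5, 5]$:

```latex
\[
\forall \varepsilon > 0 \;\; \exists p \in \mathbb{R}[x] : \max_{x \in [a, b]} |f(x) - p(x)| < \varepsilon
\quad (f \text{ continuous on } [a, b]),
\qquad
e^x = \sum_{k=0}^{\infty} \frac{x^k}{k!}
\]
```

The theorem only guarantees existence: it does not say which degree is needed, and a least-squares fit minimizes the mean square error rather than the maximum error.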

A difficult case study: the damped sinusoid

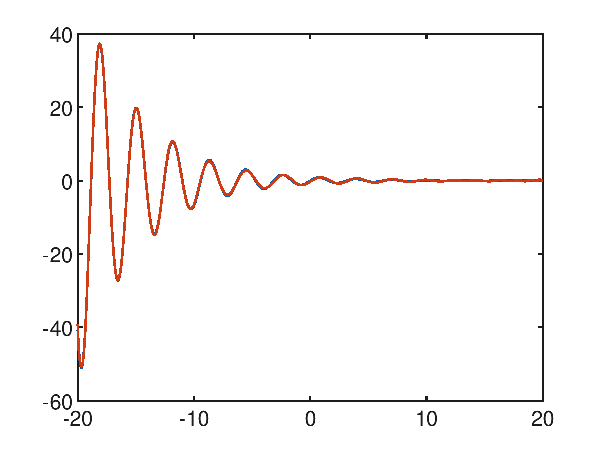

The function $f(x)=\frac{\sin 2x}{e^{x/5}}$, a kind of damped sinusoid, does not seem easy to approximate within the interval $[-20, 20]$ because of its many local minima and maxima; for this reason we immediately try a high-degree polynomial, for example a twentieth-degree one; from the following graph:

note that degree 20 is not sufficient: the mean square error is high, equal to 29.906606603488548.
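Applying the same empirical heuristic used for the sinusoid (with the same caveat that it does not hold in general) gives a rough lower bound for the degree, obtained by counting the critical points of $f$ in $[-20, 20]$:

```latex
\[
\begin{aligned}
f(x) &= e^{-x/5} \sin 2x \\
f'(x) &= e^{-x/5} \left( 2\cos 2x - \tfrac{1}{5} \sin 2x \right) = 0 \iff \tan 2x = 10 \\
x_k &= \tfrac{1}{2} \arctan 10 + k \, \tfrac{\pi}{2} \approx 0.7356 + 1.5708\,k, \qquad k \in \mathbb{Z} \\
x_k \in [-20, 20] &\iff -13 \le k \le 12 \;\Rightarrow\; 26 \text{ relative extrema} \;\Rightarrow\; \deg p \ge 27
\end{aligned}
\]
```

So at least a polynomial of degree 27 would be needed to reproduce the 27 alternating stretches, which is consistent with degree 20 being insufficient and degree 40 working well.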

Using a fortieth degree polynomial, the approximation becomes very accurate, with a significantly lower mean square error of 0.03318409290980062:

Download of the complete code

The complete code is available at GitHub.

These materials are distributed under MIT license; feel free to use, share, fork and adapt these materials as you see fit.

Also please feel free to submit pull-requests and bug-reports to this GitHub repository or contact me on my social media channels available on the top right corner of this page.